Big Data Testing Services

Facing data integrity, performance bottlenecks, or quality assurance challenges? Our expert team delivers tailored solutions aligned with your unique business objectives.

Why Do You Need Big Data Testing?

Ensures Data Quality: Big data testing helps ensure that the data behind your decisions is accurate and reliable.

Saves Costs: Reduces expenses by helping optimize data processing and storage.

Optimizes Performance: Ensures your systems can efficiently handle large volumes of data.

Supports Smart Decisions: Improves business decisions through high-quality data insights.

Supports Security and Compliance: Keeps your data safe and ensures compliance with legal standards.

Mitigates Risks: Detects potential issues that could lead to data failures and operational downtime.

Types of Big Data Testing Services

Architecture Testing

Verifies that the system architecture processes data correctly and meets the business requirements.

Database Testing

Validates that data gathered from various sources is stored in and retrieved from the databases in the correct format.
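As an illustration of this kind of check, the sketch below compares rows from a source extract with rows read back from a target store and reports anything missing, unexpected, or altered. It is a minimal example under stated assumptions, not a description of any specific toolchain: the function names (`row_fingerprint`, `reconcile`), the `id` key, and the sample rows are all hypothetical.

```python
import hashlib
import json


def row_fingerprint(row):
    """Return a stable SHA-256 hash of a row (keys sorted so field order does not matter)."""
    canonical = json.dumps(row, sort_keys=True, default=str)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def reconcile(source_rows, target_rows, key="id"):
    """Compare two row sets by key and report differences.

    The key name "id" is an assumption made for this example.
    """
    source = {r[key]: row_fingerprint(r) for r in source_rows}
    target = {r[key]: row_fingerprint(r) for r in target_rows}
    shared = source.keys() & target.keys()
    return {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "unexpected_in_target": sorted(target.keys() - source.keys()),
        "mismatched": sorted(k for k in shared if source[k] != target[k]),
    }


# Hypothetical sample data: row 2 was altered, row 3 was lost, row 4 appeared.
source_rows = [
    {"id": 1, "name": "Ann"},
    {"id": 2, "name": "Bob"},
    {"id": 3, "name": "Cy"},
]
target_rows = [
    {"id": 1, "name": "Ann"},
    {"id": 2, "name": "bob"},
    {"id": 4, "name": "Dee"},
]

report = reconcile(source_rows, target_rows)
print(report)
```

On real datasets the same idea is usually applied per partition or per batch rather than by loading every row into memory.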

Performance Testing

Ensures stable performance of big data applications by checking loading and processing speed.

Integration Testing

This involves testing the integration between various components of the Big Data system and external systems and applications.

Functional Testing

Includes tests for all the sub-components, scripts, programs, and tools used for storing, loading, and processing data.

Data Processing Testing

Verifies the accuracy and efficiency of data transformation and manipulation processes, including data cleaning, normalization, aggregation, and enrichment.
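A transformation test of this kind can be sketched as a small pipeline run against a hand-checked expected result. The example below is illustrative only: the `clean`, `normalize`, `aggregate`, and `run_pipeline` functions, the `region` and `amount` fields, and the sample records are assumptions made for the sketch, and a production pipeline would typically run on a framework such as Spark.

```python
from collections import defaultdict


def clean(records):
    """Drop records with a missing amount or a blank region."""
    return [
        r for r in records
        if r.get("amount") is not None and (r.get("region") or "").strip()
    ]


def normalize(records):
    """Normalize region names to trimmed upper case."""
    return [{**r, "region": r["region"].strip().upper()} for r in records]


def aggregate(records):
    """Sum amounts per region."""
    totals = defaultdict(float)
    for r in records:
        totals[r["region"]] += r["amount"]
    return dict(totals)


def run_pipeline(records):
    """Apply cleaning, then normalization, then aggregation."""
    return aggregate(normalize(clean(records)))


# Hypothetical input: two dirty records should be dropped,
# and " eu " and "EU" should land in the same bucket.
raw = [
    {"region": " eu ", "amount": 10.0},
    {"region": "EU", "amount": 5.0},
    {"region": "us", "amount": 7.5},
    {"region": "", "amount": 3.0},
    {"region": "us", "amount": None},
]

expected = {"EU": 15.0, "US": 7.5}
result = run_pipeline(raw)
assert result == expected, f"unexpected aggregation result: {result}"
```

Keeping each stage as a separate function lets a test target cleaning, normalization, and aggregation individually as well as end to end.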

Data Storage Testing

Assesses the integrity, security, and accessibility of data stored in databases, data warehouses, or other storage systems, including testing for data consistency, indexing, and retrieval.

Results From Implementing Big Data Testing Services

Enhanced Data Quality And Accuracy

Big data testing helps to ensure data accuracy, completeness, and consistency at every step, from ingestion to analysis.

Reduced Risk Of Errors And Biases

Big data testing proactively mitigates risks like errors and biases by scrutinizing algorithms, validating data integrity, and preventing faulty results from influencing your decisions.

Improved Performance And Efficiency

It identifies bottlenecks, optimizes processing algorithms, and fine-tunes resource allocation.

Increased Confidence In Data-Driven Decisions

Big data testing equips you with the confidence to trust your insights, knowing they're built on a solid foundation of quality and accuracy.

Enhanced Compliance And Security

It helps you verify compliance with regulations and secure sensitive information.

Our Approach

Requirement Analysis

Define testing scope based on project needs.

Test Planning

Develop a strategic approach detailing methodologies and tools.

Test Case Development

Construct targeted test scenarios for comprehensive coverage.

Test Execution

Implement tests and log any issues.

Result Analysis And Recommendations

Offer insights and actionable improvements.

Change Implementation Support

Assist in applying test feedback.

Technology Stack

Apache Hadoop

An open-source framework that enables the storage and processing of vast datasets across distributed systems.

Apache Spark

A fast, general-purpose cluster computing engine for large-scale data processing, covering both batch and near-real-time streaming workloads.

HP Vertica

A columnar database management system, HP Vertica is built for fast querying and analytics on large datasets.

HPCC

A scalable supercomputing platform for Big Data testing, supporting data parallelism and offering high performance. Requires familiarity with C++ and ECL programming languages.

Cloudera

An enterprise data platform that bundles Apache Hadoop, Impala, and Spark. Known for its easy implementation, robust security, and seamless data handling.

Cassandra

Cassandra is a reliable open-source tool for managing extensive data on standard servers. It features automated replication, scalability, and fault tolerance.

Storm

A versatile open-source tool for real-time unstructured data processing that is compatible with various programming languages. Known for its scalability, fault tolerance, and wide range of applications.

Why Choose Luxe Quality for Big Data Testing Services?

At our quality assurance services company, we combine experience and the latest technology for the best results.

Quick Start

We can immediately provide a highly skilled specialist for big data testing after signing the contract.

Free Trial

Experience our QA services with a 40-hour trial. Evaluate the capabilities of our company and see how it works.

Own Training Center

Our engineers train on up-to-date technologies in our own training center and can find an approach to any project.

Flexibility

We offer adaptability in personnel selection and resource allocation. Our highly skilled QA audit experts provide recommendations for product improvement.

Transparency & Trust

We know you love your product and care about your intellectual property, so we are ready to sign an NDA if required and provide prompt reporting on all our work.

40 hours of free testing

Luxe Quality has a special offer tailored for potential long-term customers who are interested in starting a pilot project.

We are offering our software testing and QA for free for the first 40 hours.

Case Studies

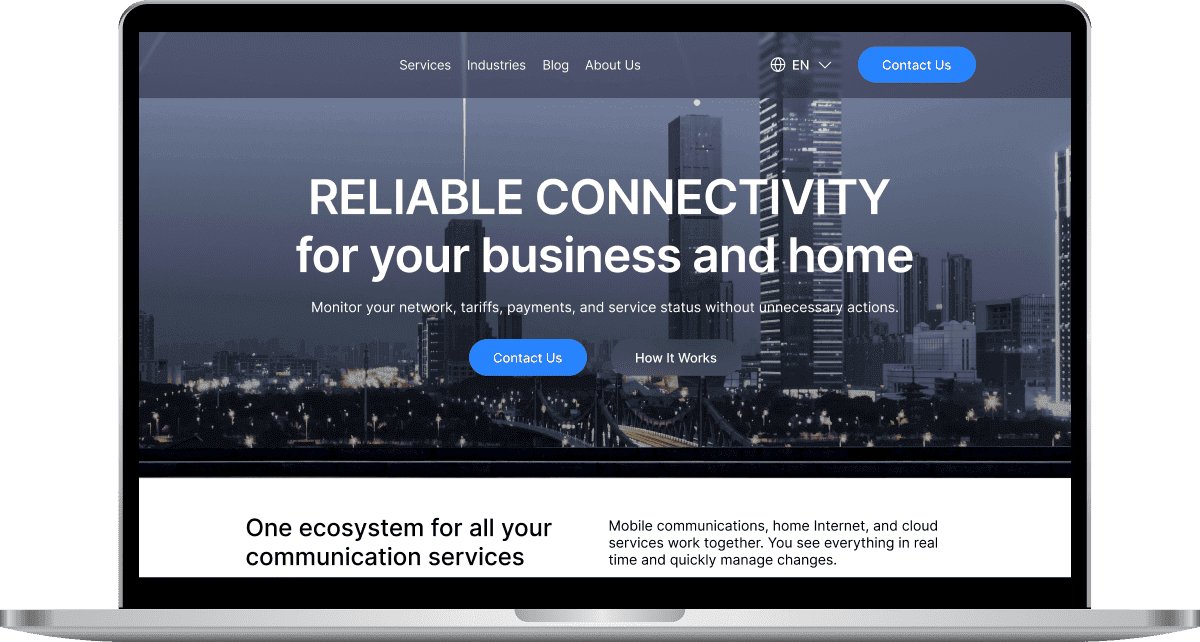

Digital Connectivity Company

USA • Web, Mobile

About project:

A digital connectivity company offering mobile, internet, and digital communication services.

Services:

- Manual and Automated testing, API, Security, Usability, Cross-browser, Cross-platform testing

- Automated testing: TypeScript + WebdriverIO + Mocha + Appium

Result:

350+ automated regression tests integrated into the CI/CD pipeline, ~50% fewer complaints from clients to support.

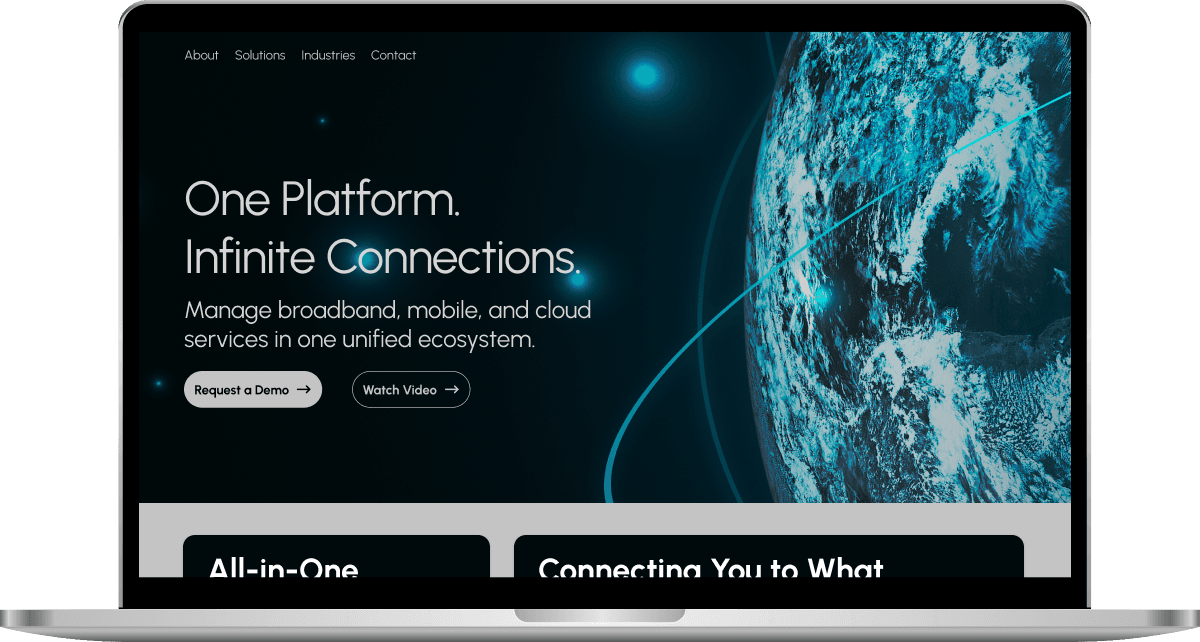

Telecommunications Provider

USA • Web, Mobile

About project:

The client is a telecommunications provider offering broadband, mobile, and cloud communication services.

Services:

- Manual and Automated testing, API, Smoke, Regression, Performance, Security, Usability, Cross-platform testing

- Automated testing: TypeScript + WebdriverIO + Mocha + Appium

Result:

~70% of regression tests automated, reducing manual QA involvement in regression cycles by 60%.

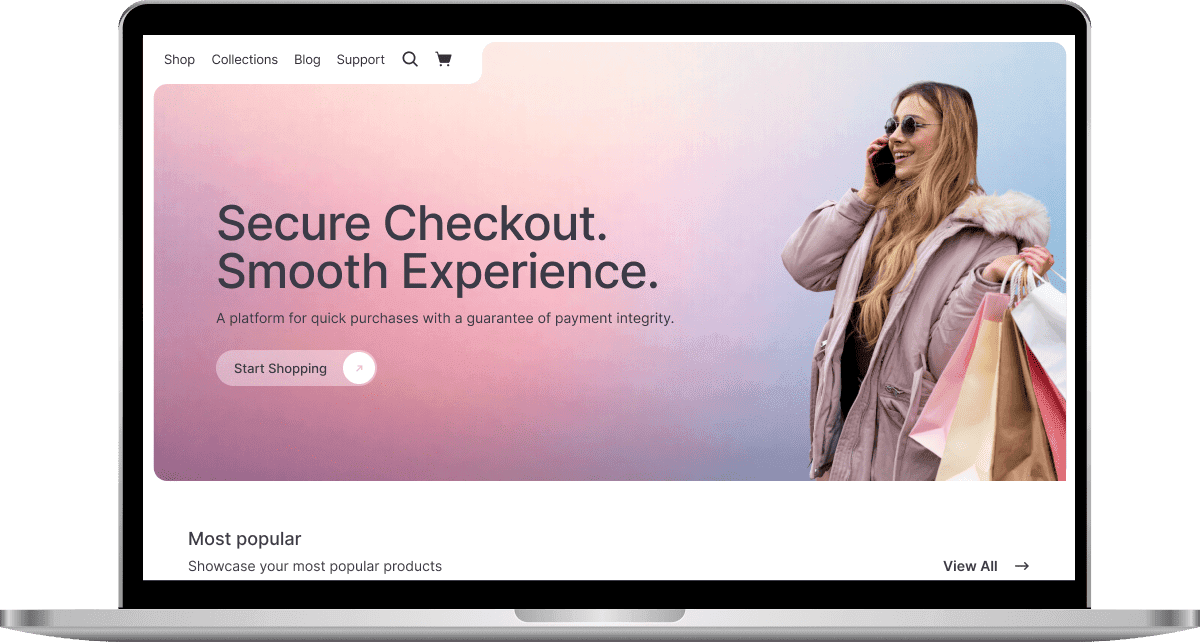

E-Commerce Retailer

USA • Web, Mobile

About project:

An e-commerce retailer that provides customers with a seamless shopping experience through its web and mobile platforms.

Services:

- Manual and Automated testing, API, Usability, Cross-browser, Cross-platform testing

- Automated testing: TypeScript + WebdriverIO + Mocha + Appium

Result:

~80% drop in user-reported issues, critical checkout errors reduced to near zero, and predictable, on-time releases for all major updates.

Get in touch

Our workflow

Now: Just fill out our quick form with your project details. It’s easy and only takes a minute.

In a Few Hours: We’ll assess your information and quickly assign a dedicated team member to follow up, no matter where you are. We work across time zones to ensure prompt service.

In 1 Day: Schedule a detailed discussion to explore how our services can be tailored to fit your unique needs.

Following Days: Expect exceptional support as our skilled QA team gets involved, bringing precision and quality control to your project right from the start.

FAQ

Big Data technologies analyze large volumes of data, identify hidden patterns, determine customer needs, and optimize business processes.

Working with Big Data requires knowledge of fundamental technologies like Hadoop, Spark, NoSQL, etc.

Big data software testing costs vary significantly from one application to another, depending on its characteristics and the required testing scope. Factors such as the diversity and number of data sources, architectural components, technology stacks, performance expectations, and the complexity of BI functions and user roles all play a crucial role in determining the overall testing budget. You can contact us for more detailed information and expert solutions tailored to your project needs.

Big Data is stored on cloud servers or company servers specializing in data processing.

Examples of Big Data include customer information, business sales data, website visitor statistics, health data, and more. These datasets are characterized by their volume, variety, and speed of generation.