
Jul 07, 2025 4 min read

Enhancing EdTech AI Platform Quality through Manual Testing

Platforms: Web

Country: EU

Implementation time: Aug 2024 - Jan 2025


About Project

The AI platform is designed to enhance the educational experience by giving users structured, customized learning paths and course recommendations. It adapts to individual learning styles and progress, providing a tailored approach to education. The system uses AI to analyze the responses users enter and provides personalized suggestions in real time.

Before

Before our QA joined the project, the AI system was tested only by developers, and there was no test documentation. The team needed to validate that AI output was consistent in the UI layer and aligned with the structured logic.

Challenges and Solutions

Challenges

- No test documentation.

- Integration complexity.

- No verification of how AI responses matched user-entered data.

- Lack of structure in reporting and prioritizing issues.

Solutions

- Created test documentation: a test strategy and a detailed test plan with test suites.

- Used exploratory testing to validate integrations and data flow between components.

- Validated AI response logic against predefined input rules by designing structured test scenarios and creating input-output patterns for consistency checks.

- Aligned with the team on a straightforward workflow for bug reporting and prioritization.
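The input-output patterns mentioned in the solutions above can be pictured as a small table of rules, each pairing a structured input with properties the AI response must satisfy. The sketch below is illustrative only: the `Pattern` class, the field names (`level`, `topic`), the rule format, and the sample responses are assumptions, not the project's actual test data.

```python
from dataclasses import dataclass, field


@dataclass
class Pattern:
    """Hypothetical input-output rule: for this input, the response must
    mention every required term and none of the forbidden ones."""
    user_input: dict
    required_terms: list
    forbidden_terms: list = field(default_factory=list)


def check_response(pattern: Pattern, response: str) -> list:
    """Return a list of readable violations; an empty list means consistent."""
    text = response.lower()
    problems = []
    for term in pattern.required_terms:
        if term.lower() not in text:
            problems.append(f"missing required term: {term}")
    for term in pattern.forbidden_terms:
        if term.lower() in text:
            problems.append(f"contains forbidden term: {term}")
    return problems


# Assumed example: a beginner asking about Python should not be
# pointed to advanced material.
beginner_python = Pattern(
    user_input={"level": "beginner", "topic": "Python"},
    required_terms=["Python", "beginner"],
    forbidden_terms=["advanced"],
)

good = "Recommended path: Python basics for beginner learners."
bad = "Recommended path: Advanced Python concurrency."

print(check_response(beginner_python, good))  # no violations
print(check_response(beginner_python, bad))   # two violations
```

Keyword rules like these are deliberately crude. They suit manual consistency checks where a tester compares many responses against the same input and wants each mismatch stated in plain words for the bug report.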

Technologies, Tools, and Approaches

- Chrome DevTools: Used to analyze frontend behavior and inspect how AI responses render in the DOM.

- Figma: Validated layout and interaction logic against design mockups.

- Jira: Used for bug tracking, task management, and status reporting.

- Postman: Used to test the API endpoints connected to the AI modules directly and to check payload structure, latency, and fallback responses.

- PromptLayer: Used for tracking, analyzing, and debugging AI prompts and responses to ensure consistent and reliable output.
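The API checks listed above (payload structure, latency, fallback responses) were run in Postman. As a rough sketch, the same three checks can be written as a plain function. The field names `recommendations` and `fallback` and the 2000 ms latency budget are assumptions made for illustration; the case study does not describe the real API contract.

```python
from typing import Any

# Assumed contract for illustration only.
REQUIRED_FIELDS = {"recommendations": list, "fallback": bool}
LATENCY_BUDGET_MS = 2000


def review_ai_response(payload: dict[str, Any], elapsed_ms: float) -> list[str]:
    """Check one already-received response and return the issues found."""
    issues = []
    # Payload structure: every required field is present with the expected type.
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in payload:
            issues.append(f"missing field: {name}")
        elif not isinstance(payload[name], expected_type):
            issues.append(f"wrong type for {name}")
    # Latency: compare the measured time against the assumed budget.
    if elapsed_ms > LATENCY_BUDGET_MS:
        issues.append(f"slow response: {elapsed_ms:.0f} ms")
    # Fallback: a fallback answer should still offer at least one recommendation.
    if payload.get("fallback") is True and not payload.get("recommendations"):
        issues.append("fallback response has no recommendations")
    return issues


ok = {"recommendations": ["Intro to Statistics"], "fallback": False}
slow_fallback = {"recommendations": [], "fallback": True}

print(review_ai_response(ok, 850.0))
print(review_ai_response(slow_fallback, 3100.0))
```

The function takes a parsed payload and a measured duration rather than calling an endpoint, so it can be run against responses exported from any HTTP client.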

Features of the Project

- AI-driven logic for structured input processing and personalized recommendations.

- Controlled input flow: restriction on invalid or unstructured data.

- Real-time integration of the frontend UI with the AI modules.

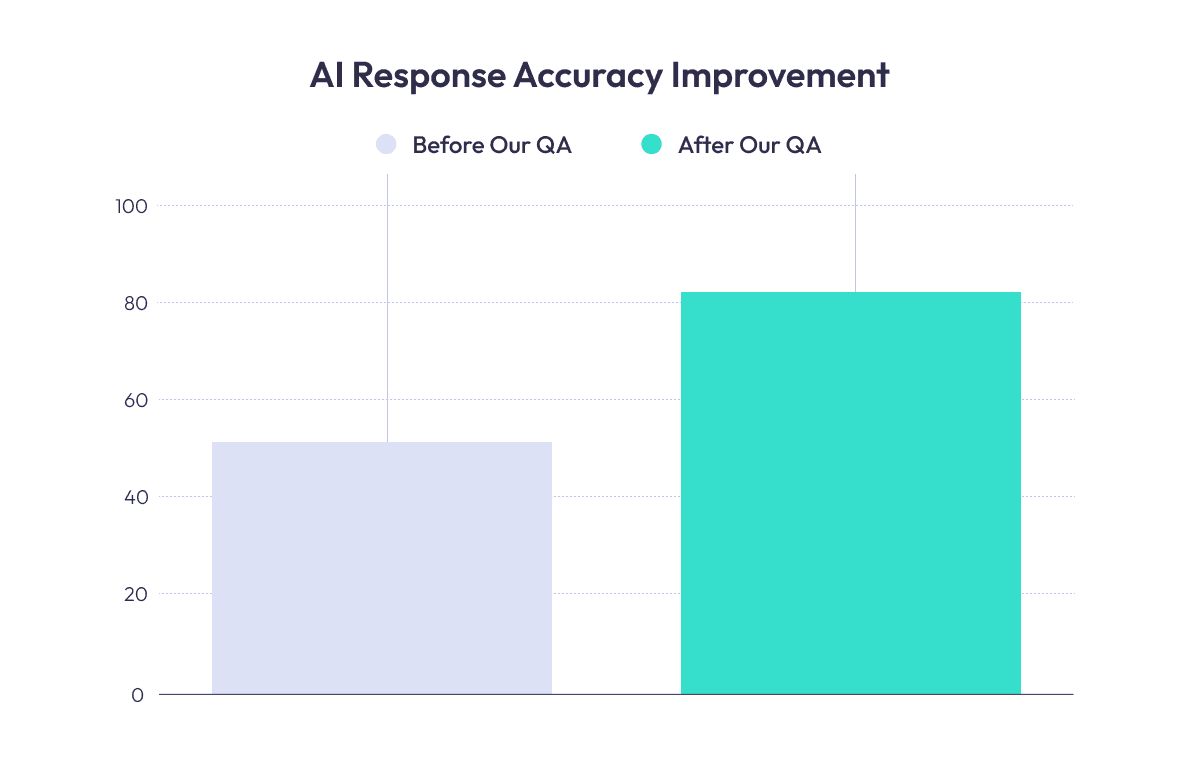

Results

- Up to 300 manual test cases were written to validate structured flows and edge cases.

- Up to 250 bugs were reported.

- Introduced a new bug report format that increased team alignment and issue resolution speed.

- Improved usability of the user interface.

- AI response accuracy improved.

Testing types: manual testing, functional testing, regression testing, exploratory testing, smoke testing, retest

Tools: DevTools, Jira, Postman

Your project could be next!

Ready to get started? Contact us to explore how we can work together.

Other Projects

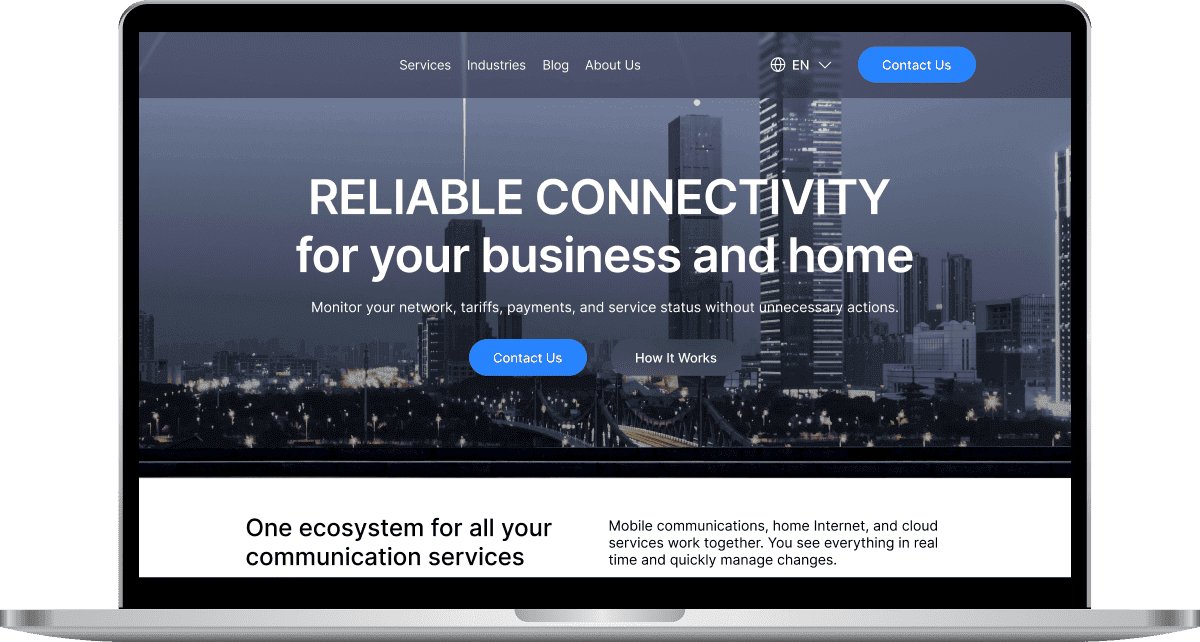

Read moreDigital Connectivity Company

USA

•Web, Mobile

About project:

A digital connectivity company offering mobile, internet, and digital communication services.

Services:

- Manual and Automated testing, API, Security, Usability, Cross-browser, Cross-platform testing

- Automated testing -TypeScript + WebdriverIO + Mocha + Appium

Result:

350+ automated regression tests integrated into the CI/CD pipeline, ~50% fewer complaints from clients to support.FULL CASE STUDY

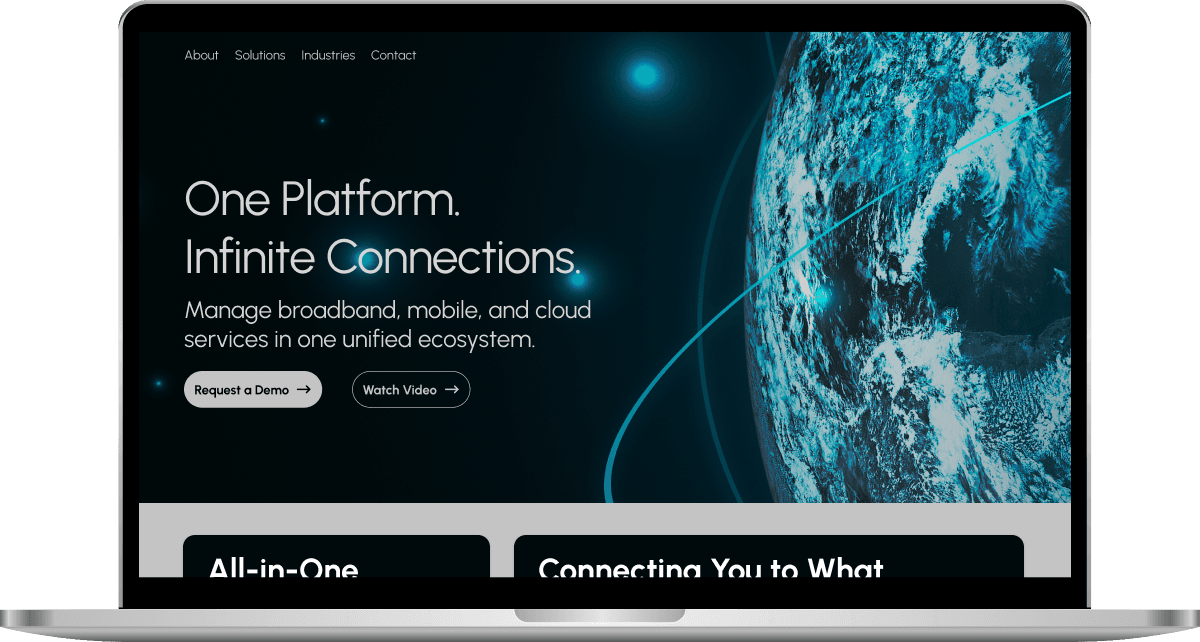

Telecommunications Provider

USA

•Web, Mobile

About project:

The client is a telecommunications provider offering broadband, mobile, and cloud communication services.

Services:

- Manual and Automated testing, API, Smoke, Regression, Performance, Security, Usability, Cross-platform testing

- Automated testing -TypeScript + WebdriverIO + Mocha + Appium

Result:

~70% of regression tests automated, reducing manual QA's involvement in regression cycles by 60%.FULL CASE STUDY

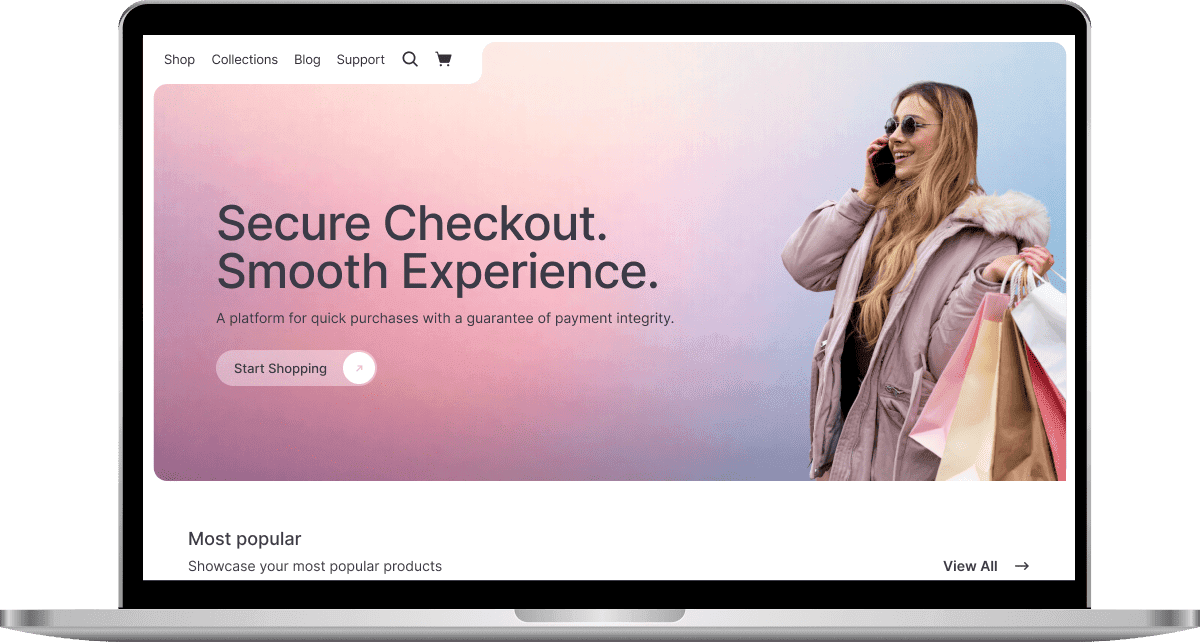

E-Commerce Retailer

USA

•Web, Mobile

About project:

An online E-commerce retailer that provides customers with a seamless online shopping experience through its web and mobile platforms.

Services:

- Manual and Automated testing, API, Usability, Cross-browser, Cross-platform testing

- Automated testing -TypeScript + WebdriverIO + Mocha + Appium

Result:

~80% drop in user-reported issues, critical checkout errors reduced to near zero, predictable, on-time releases for all major updates.FULL CASE STUDY